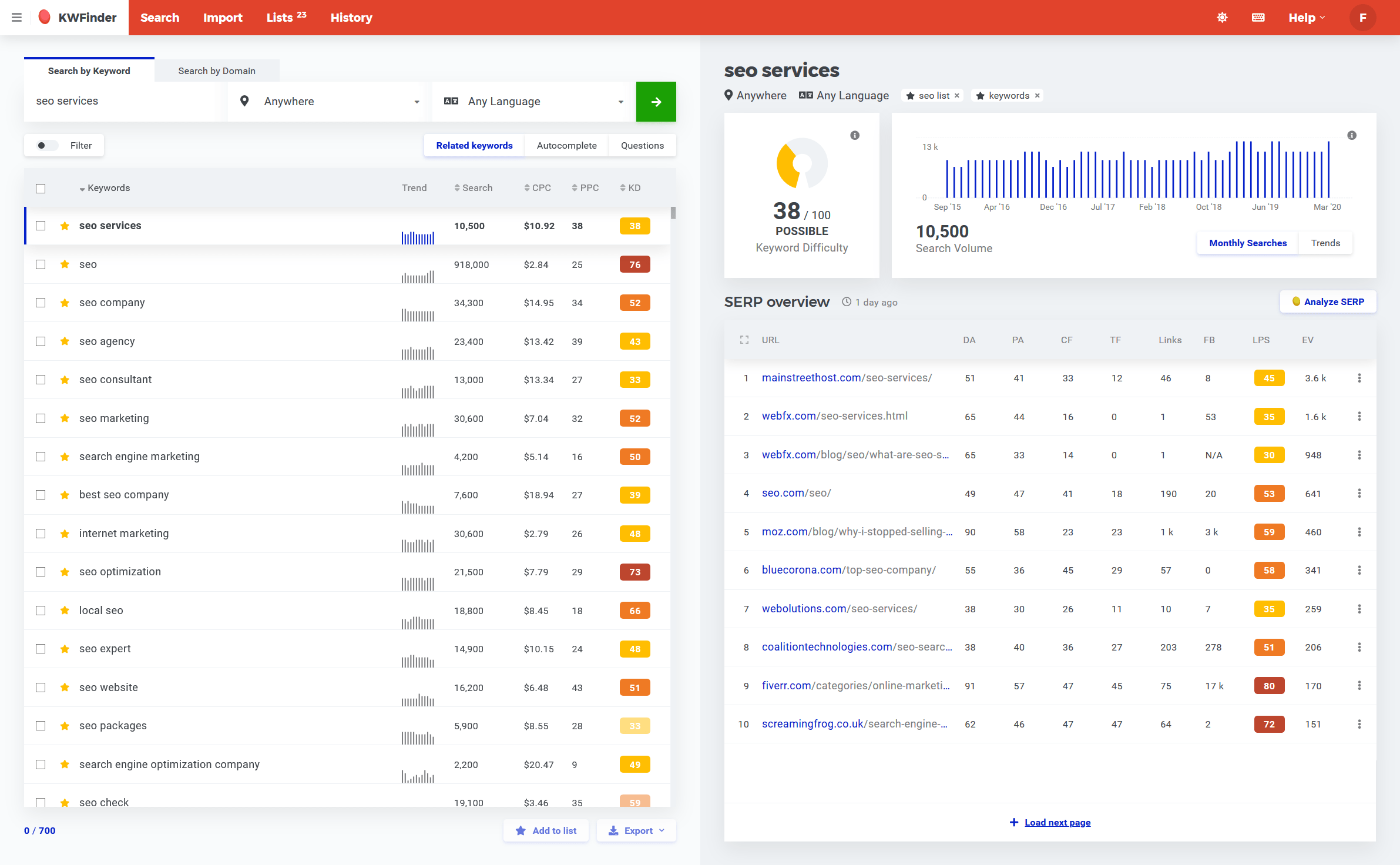

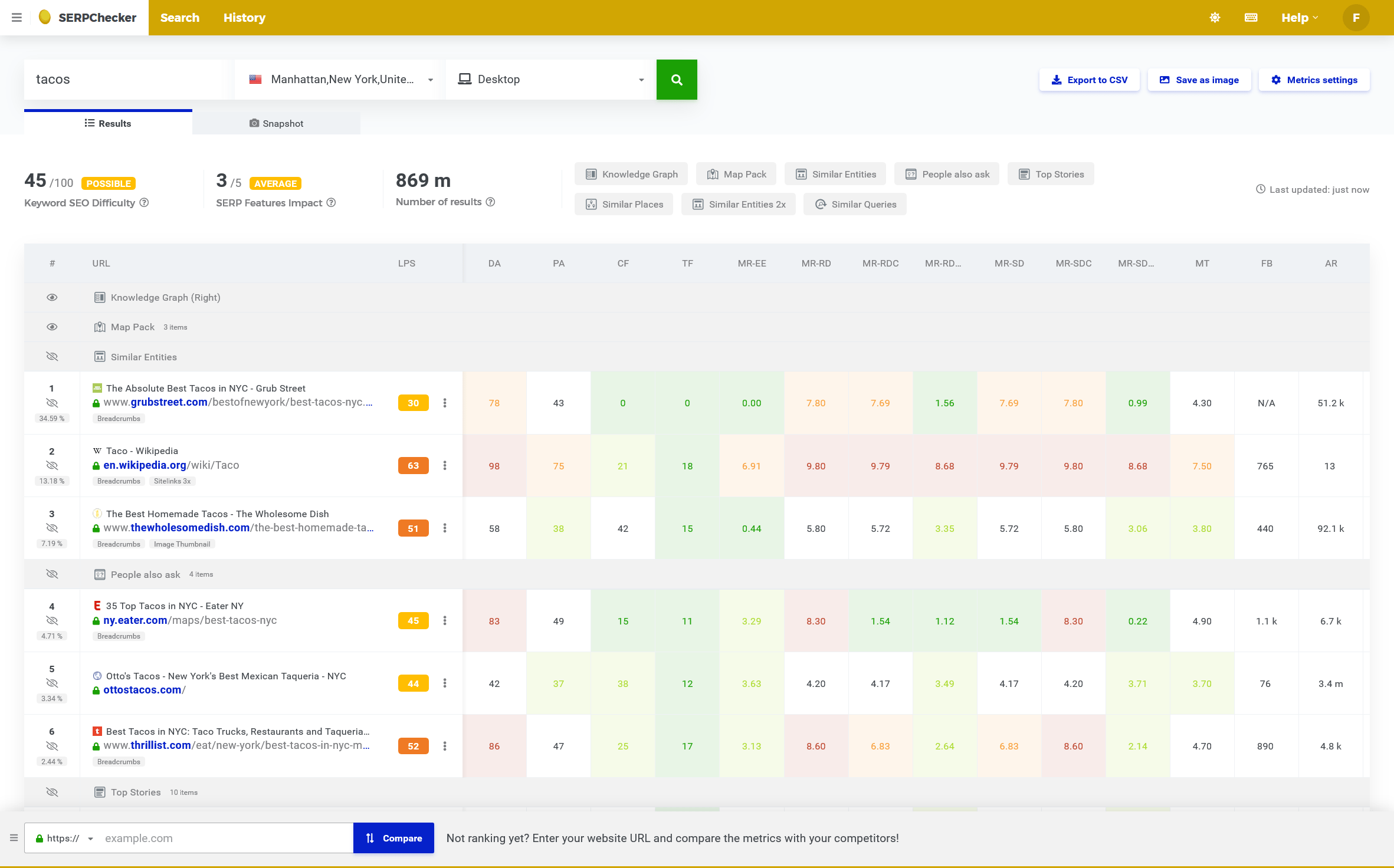

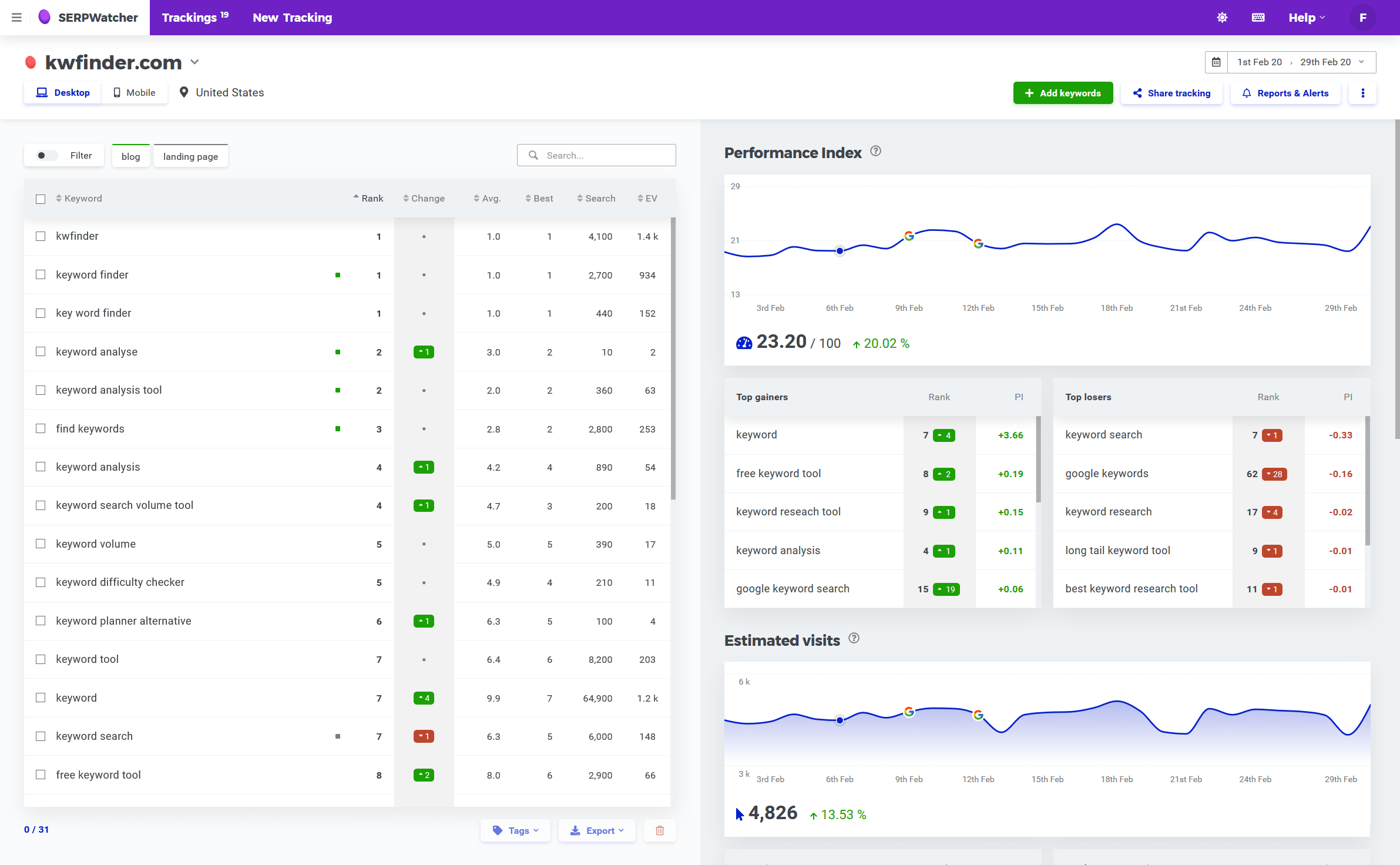

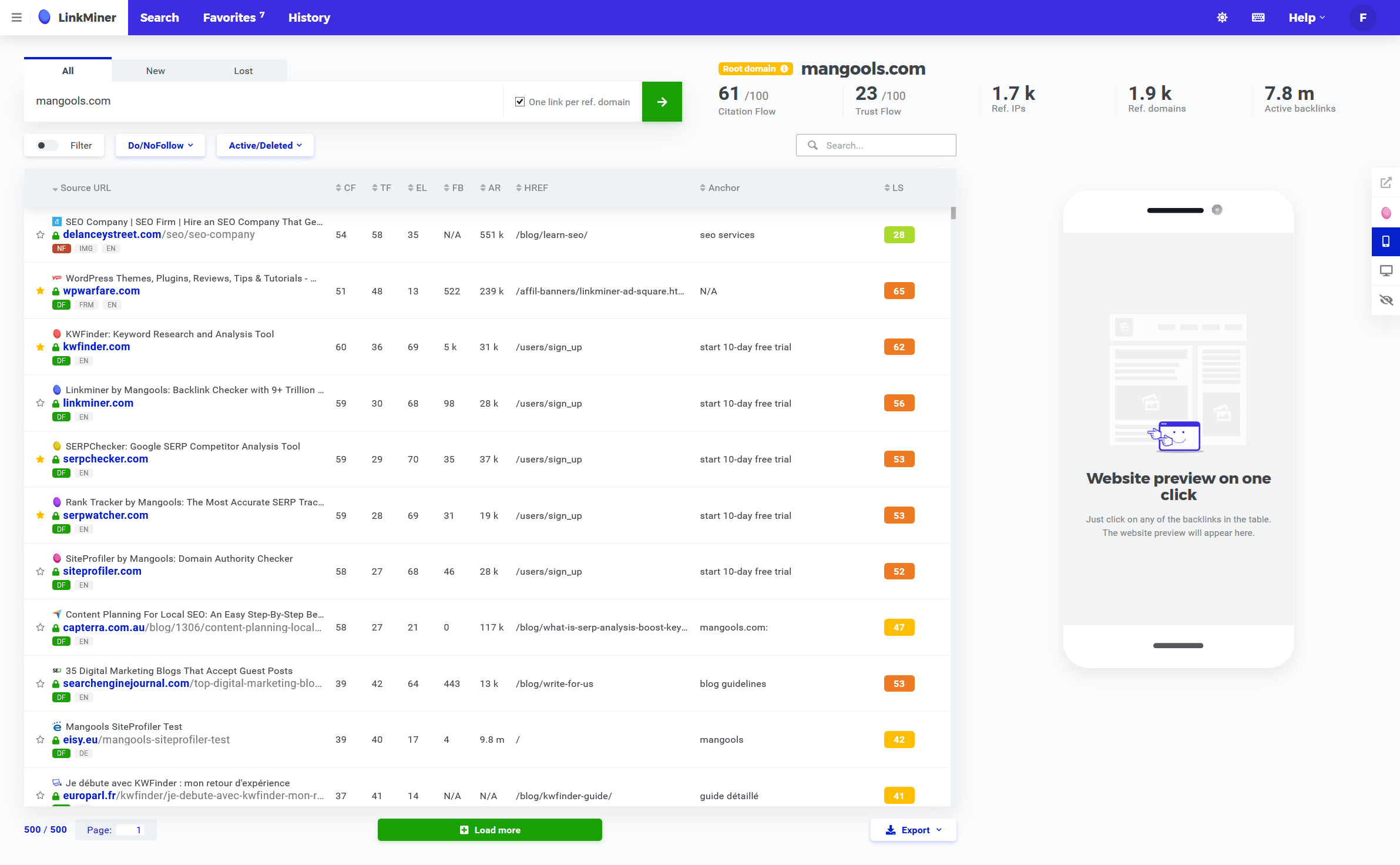

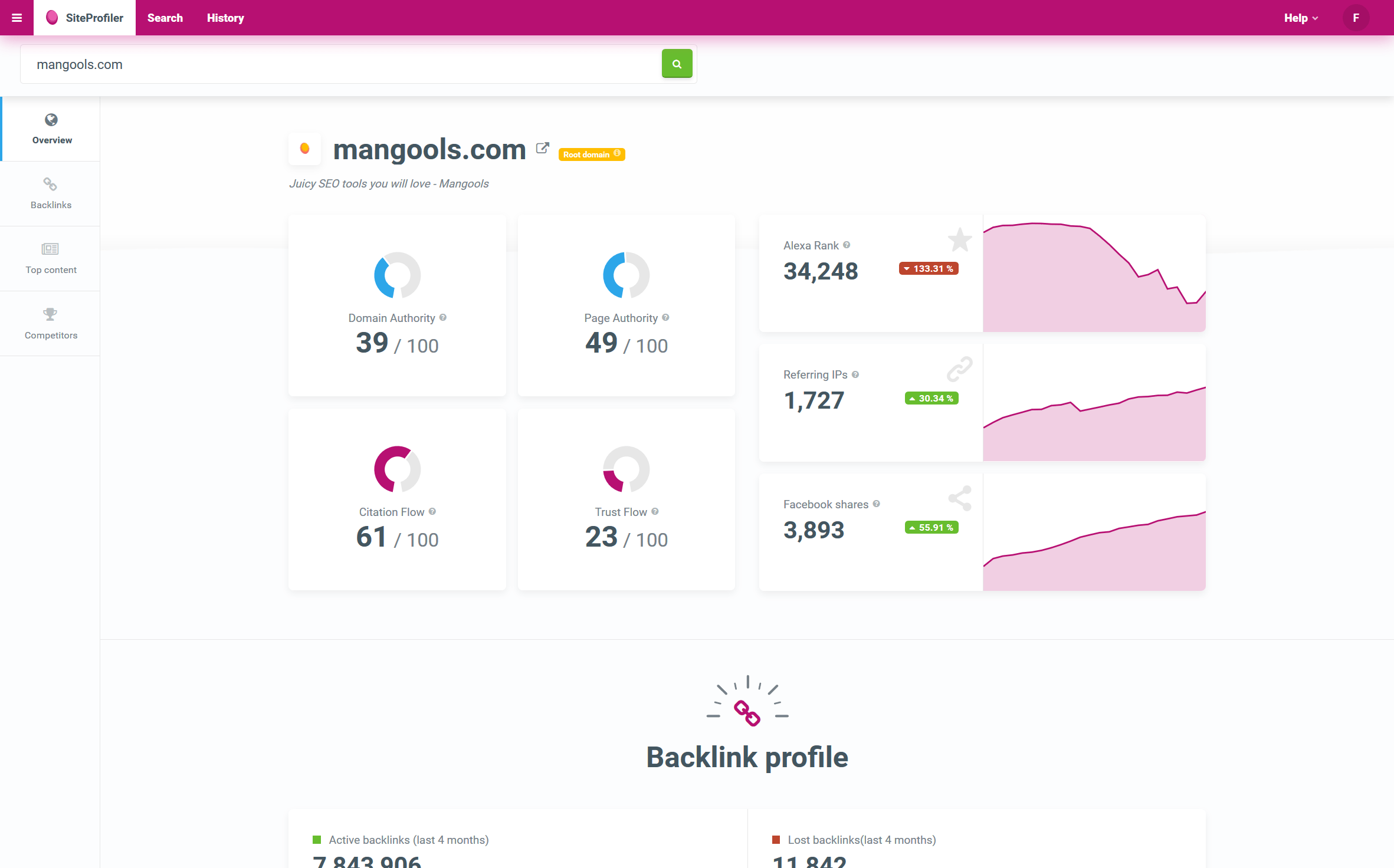

Juicy SEO tools you will love

Making SEO Simple since 2014.

Why people love Mangools

Ease of use and great UI

You don't have to be an expert to start using our SEO tools. You'll understand all the data and features instantly.

Support with SEO skills

Unlike typical outsourced support agents, the people on our support team actually do SEO every day.

Best value for money

A good SEO toolset doesn't have to cost a fortune. We're no giant all-in-one tool, but you'll get all the features you need.

Trusted by the big ones, loved by everyone

It's got a seamless interface, powerful features, and beautiful design, which makes it a great choice especially for beginner bloggers.

Gael Breton

AuthorityHacker.com

Mangools is much more than just software - you also get access to an amazing team of people with real knowledge in SEO.

Julia McCoy

ContentHacker.com

At $29/month you really can't go wrong with a Mangools subscription. Great value!

Brian Dean

Backlinko.com